Jinbo Wang

HUMN270

Prof. Faull

Homework 2

The corpus I chose for this assignment is part of the corpus for my final project. For this assignment, I collected public speeches given by Barack Obama during 2008. All of the speeches come from American Rhetoric. The website states that most of its materials are provided for educational purposes, so I believe they are free to use. The corpus can be classified in numerous ways, such as chronologically or geographically, and each classification can be further subdivided. There are 17 texts in total, averaging about 2,000 words each. Most of them address the presidential election and public affairs in the US.

The results I got from Voyant are straightforward and easy to understand:

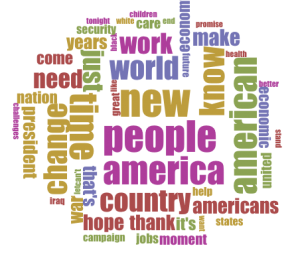

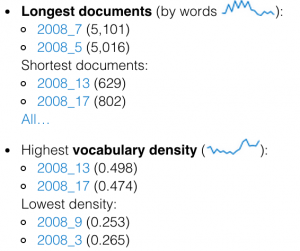

Voyant first creates a word cloud, in which words that appear more frequently in the source text are displayed more prominently. Although I personally think word clouds are mostly aesthetic, viewers can still grasp some core themes of a corpus from them. For example, in this word cloud, words such as “new”, “hope” and “change” are relatively dominant, which matches Barack Obama’s political agenda. Besides the word cloud, Voyant provides other powerful functionality, including various linguistic statistics for the corpus, such as relative word frequency and vocabulary density. I also found that some patterns are easy to draw from these statistics but hard to understand at first glance. The following is part of the linguistic statistics collected from my corpus:

From these statistics, it is easy to see that shorter documents tend to have a higher vocabulary density. Personally, I do not think there is a meaningful connection here, but it is certainly interesting to investigate.
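A quick way to check this pattern outside Voyant is to compute the ratio directly. The sketch below assumes that Voyant's vocabulary density is the number of unique words divided by the total number of words, and it uses a simple regular-expression tokenizer that is my own assumption (Voyant's tokenizer may split words differently). The sample strings are made up for illustration and are not from my corpus. The example also hints at one possible mechanical reason for the pattern: as a text grows, common words such as “we” and “can” keep repeating while new words appear more slowly, so the ratio tends to fall.

```python
import re
from collections import Counter


def tokenize(text):
    # Lowercase the text and keep runs of letters and apostrophes.
    # This tokenizer is an assumption, not Voyant's actual implementation.
    return re.findall(r"[a-z']+", text.lower())


def vocabulary_density(text):
    # Unique word types divided by total word tokens (0.0 for empty text).
    tokens = tokenize(text)
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def relative_frequencies(text, top=5):
    # The `top` most common words with their share of all tokens,
    # the same idea a word cloud uses to decide how large each word appears.
    tokens = tokenize(text)
    if not tokens:
        return []
    counts = Counter(tokens)
    return [(word, count / len(tokens)) for word, count in counts.most_common(top)]


# Made-up sample texts (not from the corpus): the longer one reuses
# many of the same words, so its density drops.
short_text = "Yes we can change this country"
long_text = short_text + " we can change it and yes we can hope"

if __name__ == "__main__":
    print(vocabulary_density(short_text))  # 1.0
    print(vocabulary_density(long_text))   # 0.6
    print(relative_frequencies(long_text, top=2))
```

Comparing densities across documents of very different lengths is therefore tricky; comparing documents of similar length would be a fairer test of whether the pattern means anything.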

Compared with Voyant, Jigsaw focuses mainly on investigating relationships between entities, such as locations and people, that appear across the corpus. Because Jigsaw is designed for intelligence analysis, it is relatively more subjective than Voyant. It adopts machine learning techniques to help people uncover relationships hidden beneath the facts, which allows more complex analysis than Voyant. For my corpus, it helped me recognize Barack Obama’s attitudes toward different public affairs. For example, this is the sentiment analysis of the 17 texts in my corpus:

In this visualization, red indicates a negative attitude and blue indicates a positive one. In the leftmost text, Obama was talking about climate change. Jigsaw classifies it as negative because of words such as “combat” and “confront”, which clearly reflect the seriousness of the speech. In contrast, the rightmost one is the “Yes We Can” speech, arguably the most excited speech Obama has given.
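To make the idea of word-driven sentiment more concrete, here is a minimal lexicon-based sketch. It is not Jigsaw's method: the two word lists, the scoring formula, and the sample sentences are all hypothetical, chosen only to mirror the words mentioned above. Each text receives a score between -1 and 1 based on how many of its words fall into the positive or negative list, and the sign of the score decides the color.

```python
import re

# Hypothetical word lists for illustration only; Jigsaw's real lexicon
# and scoring are not described in this post and likely differ.
NEGATIVE_WORDS = {"combat", "confront", "crisis", "threat"}
POSITIVE_WORDS = {"hope", "yes", "together", "proud"}


def sentiment_score(text):
    # Count lexicon hits and return a score in [-1, 1];
    # 0.0 means no lexicon words were found.
    tokens = re.findall(r"[a-z']+", text.lower())
    positive = sum(token in POSITIVE_WORDS for token in tokens)
    negative = sum(token in NEGATIVE_WORDS for token in tokens)
    hits = positive + negative
    return (positive - negative) / hits if hits else 0.0


def color_for(score):
    # Mirror the visualization: blue for positive, red for negative.
    if score > 0:
        return "blue"
    if score < 0:
        return "red"
    return "neutral"


if __name__ == "__main__":
    climate = "We must confront the threat of climate change and combat rising seas"
    rally = "Yes we can. We hope and together we rise"
    for text in (climate, rally):
        score = sentiment_score(text)
        print(f"{score:+.2f} {color_for(score)}")
```

A simple word count like this cannot tell that “confront” in a rallying call is not pessimistic, so the colors are best treated as a starting point for close reading rather than a verdict.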

Because of the way Jigsaw works, it helps me study Obama’s speeches from demographic and geographic perspectives. When speaking to different groups of people, Barack Obama may use different sets of vocabulary, and it is interesting to see whether this is just a coincidence or intentional. According to the lab instructions written by Prof. Faull, Jigsaw also has other promising functionality that reveals the inner relationships and meanings of entities. However, the version I have kept crashing on some of these features while I was testing it, so unfortunately I cannot fully explore Jigsaw’s functionality yet.

In general, I think both Voyant and Jigsaw can be put to good use for my corpus. Given the nature of my corpus, I am interested in investigating both Obama’s language use and his attitudes toward public affairs. Voyant can help me perform lexical analysis: it reveals vocabulary usage in various ways, which can help me understand his language use in different scenarios. Jigsaw, on the other hand, provides deeper analysis of entities, which helps me understand the corpus from another angle.

Both platforms are like puzzles: they provide only limited clues at a time, requiring the user to draw conclusions from them. Our brains cannot process massive amounts of data at once. Such platforms take advantage of both the computing power and the visualization capabilities of computers, revealing things we usually overlook. They are especially powerful at detecting subtle connections and hidden relationships across large amounts of text, uncovering things that would be nearly impossible for a person to find unaided. Sometimes we may arrive at ambiguous but plausible conclusions by ourselves, but most of the time we cannot find enough clues to back up our reasoning. Both platforms help us combine subtle clues into more obvious, stronger evidence to validate potential conclusions.
